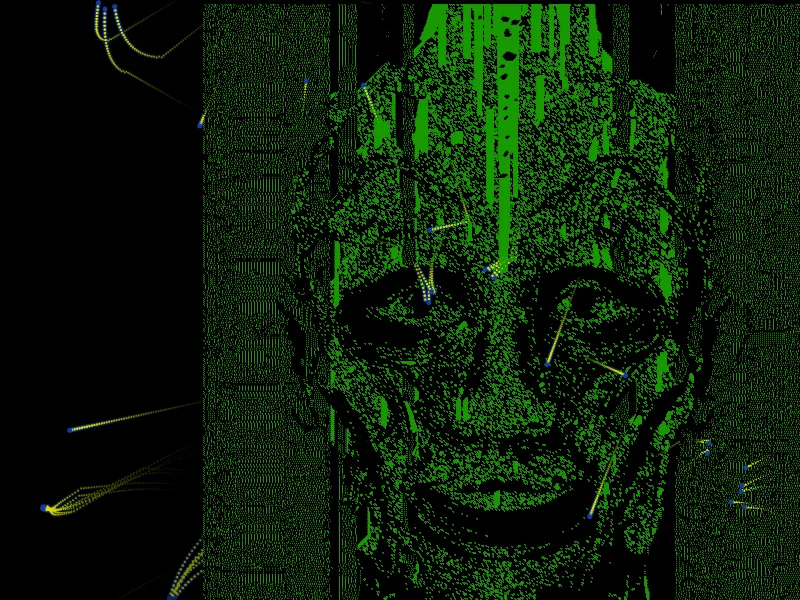

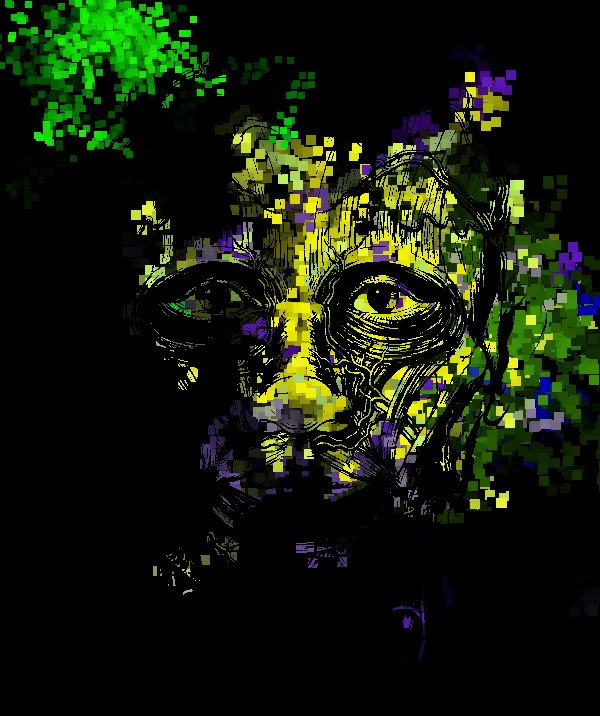

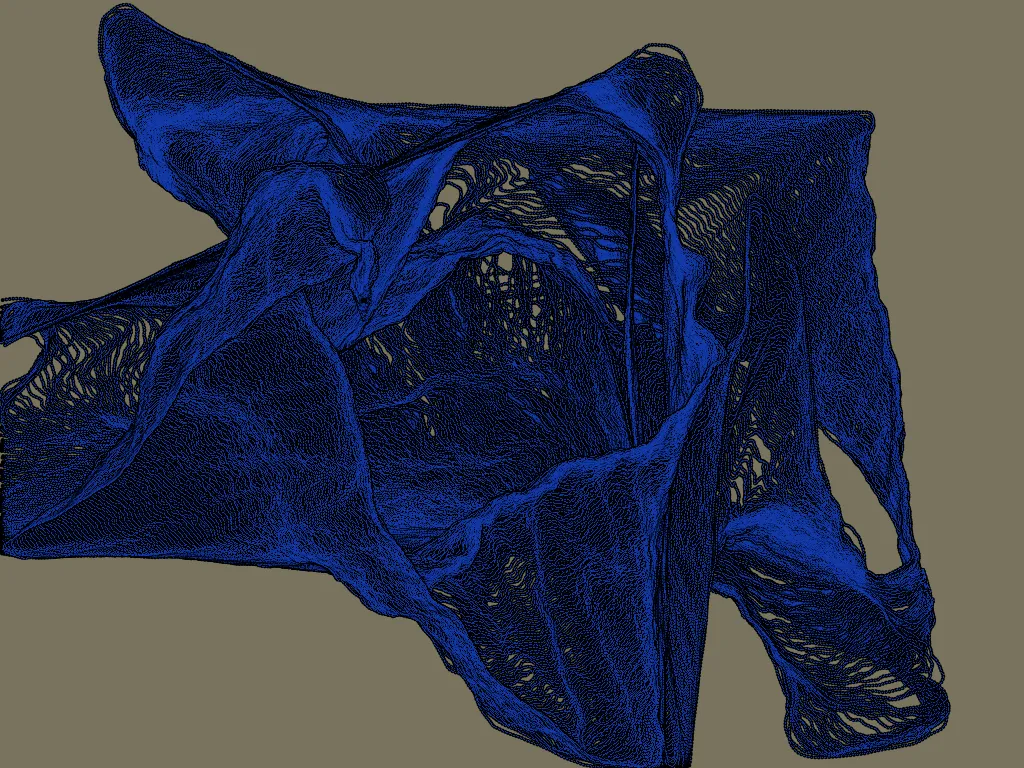

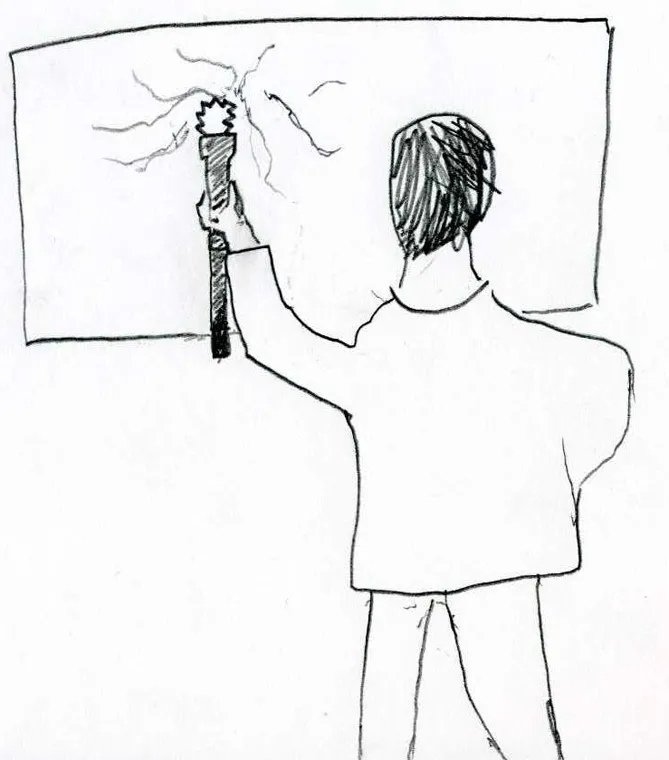

College projects

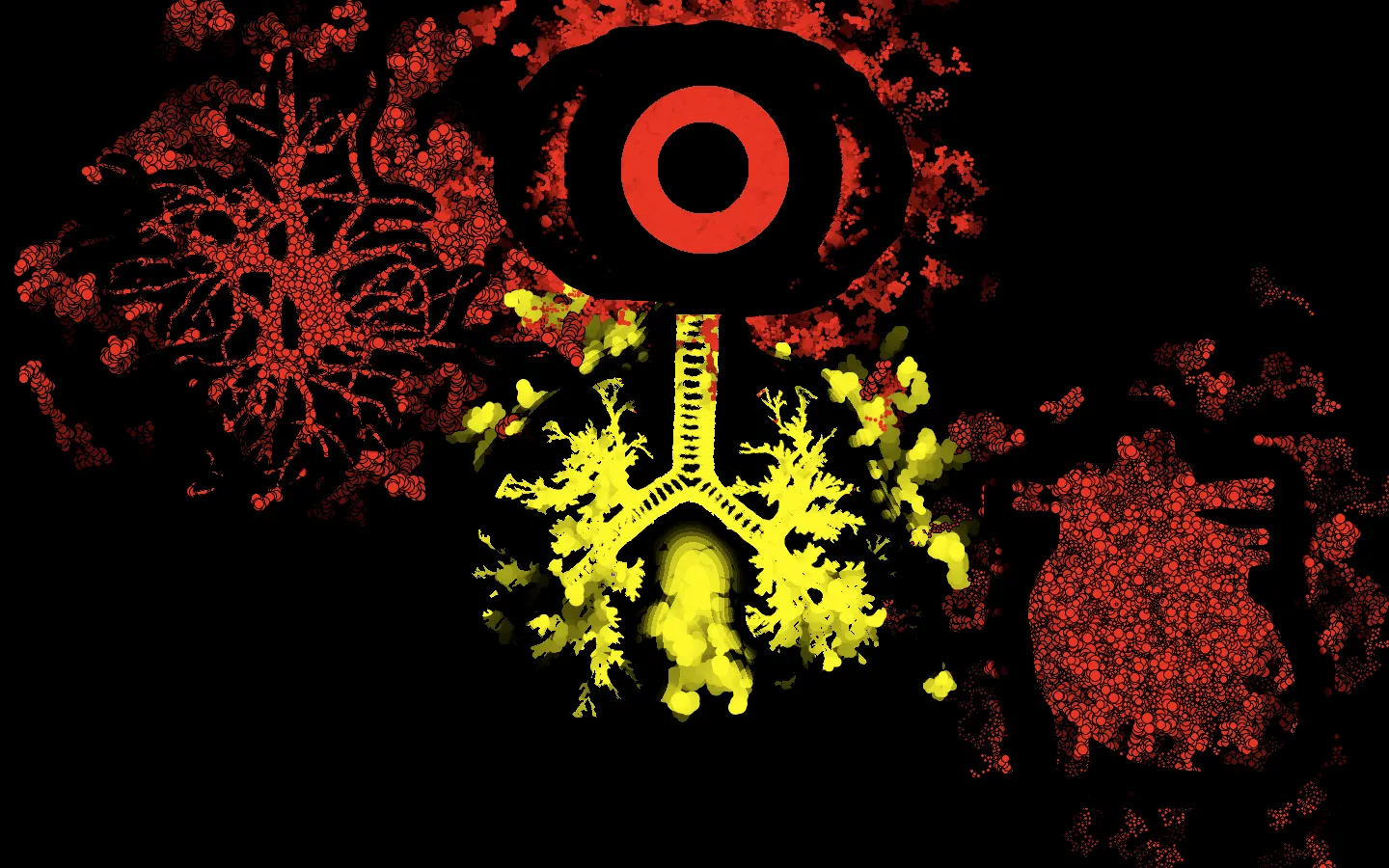

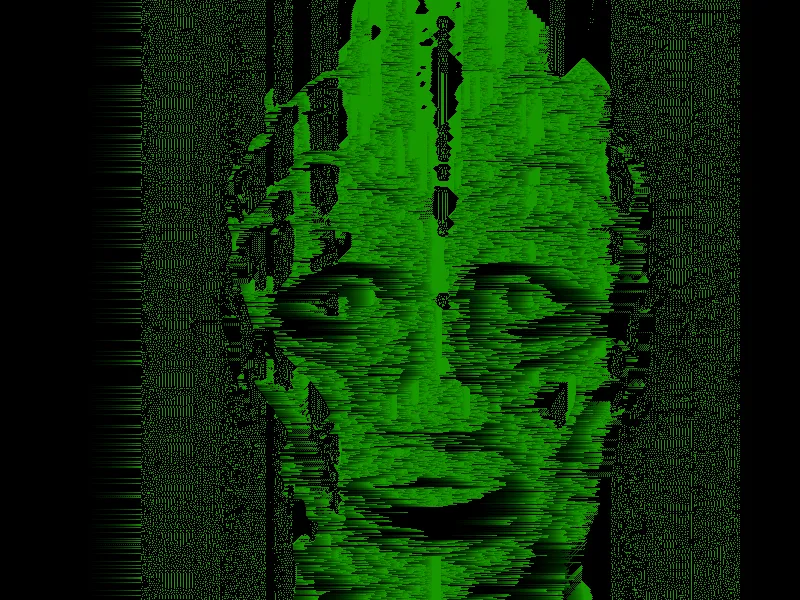

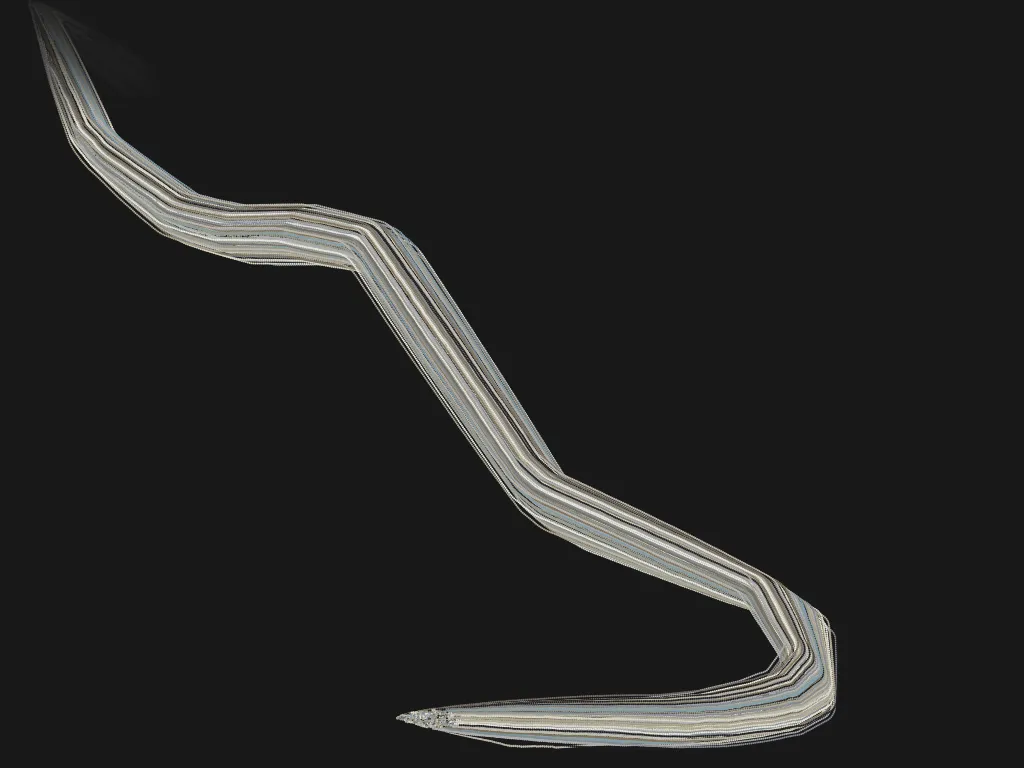

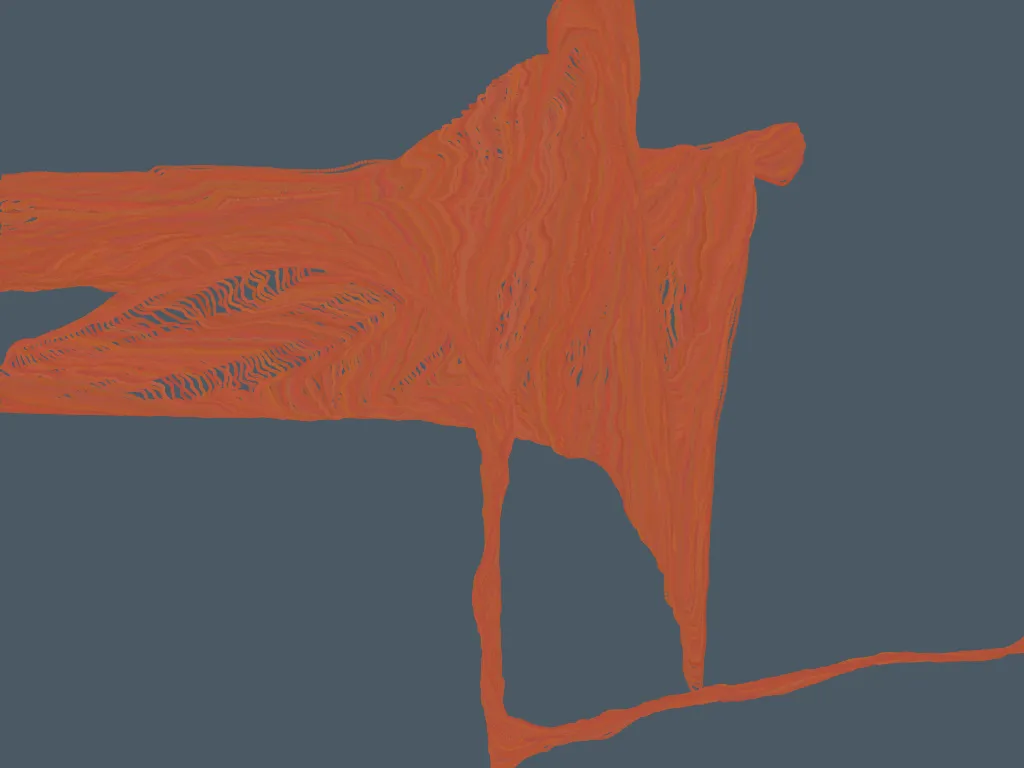

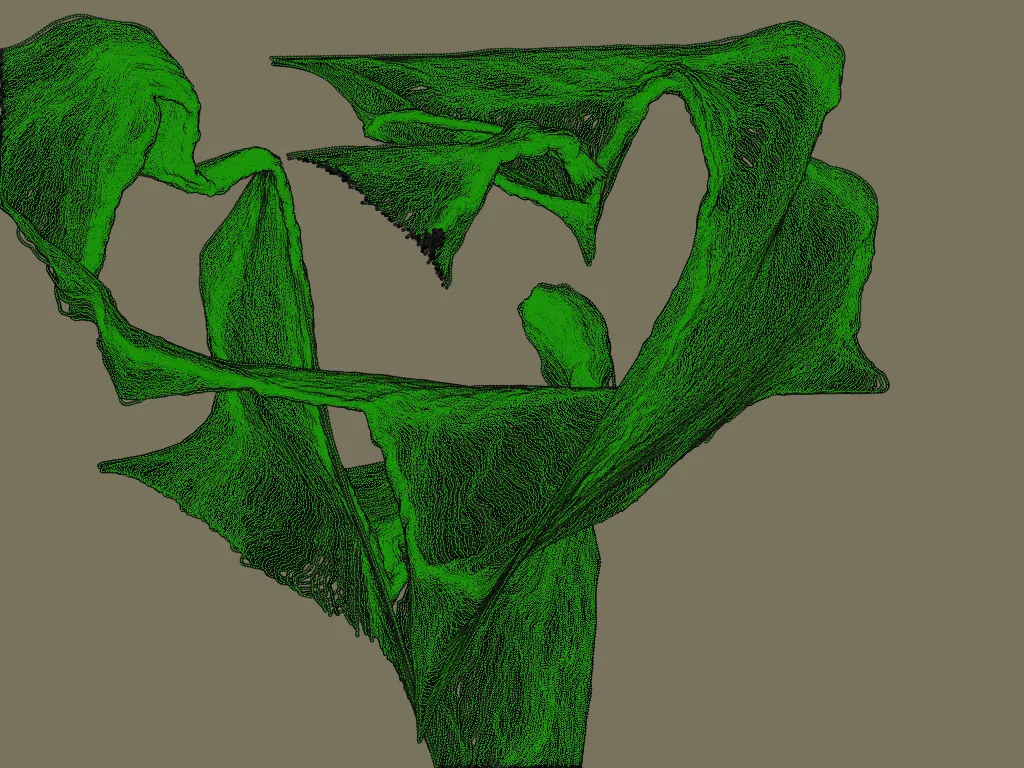

Cellular automata explorations, etc.

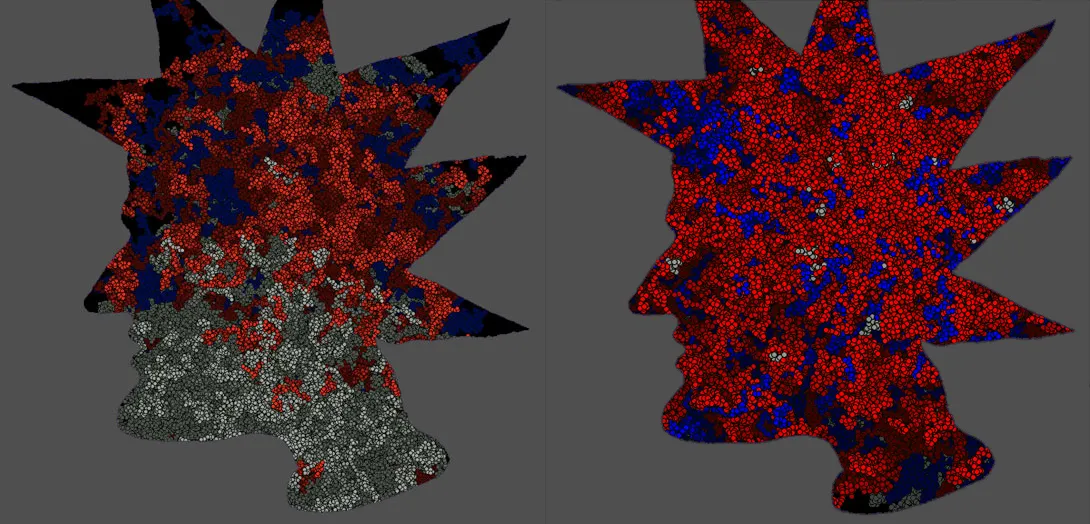

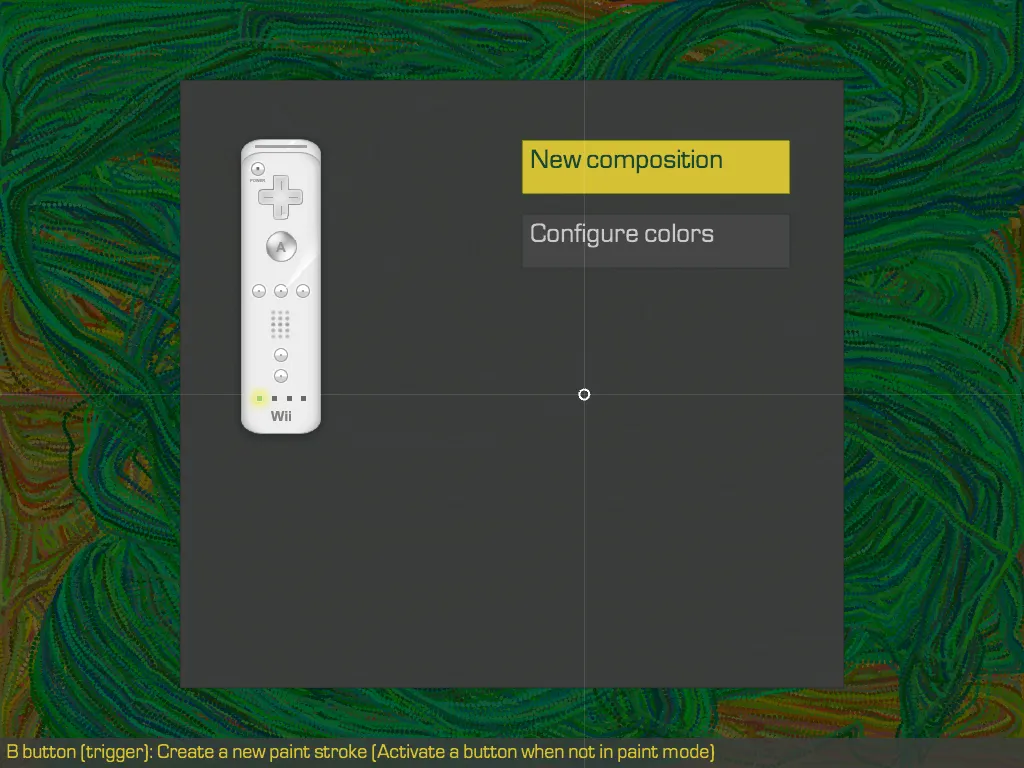

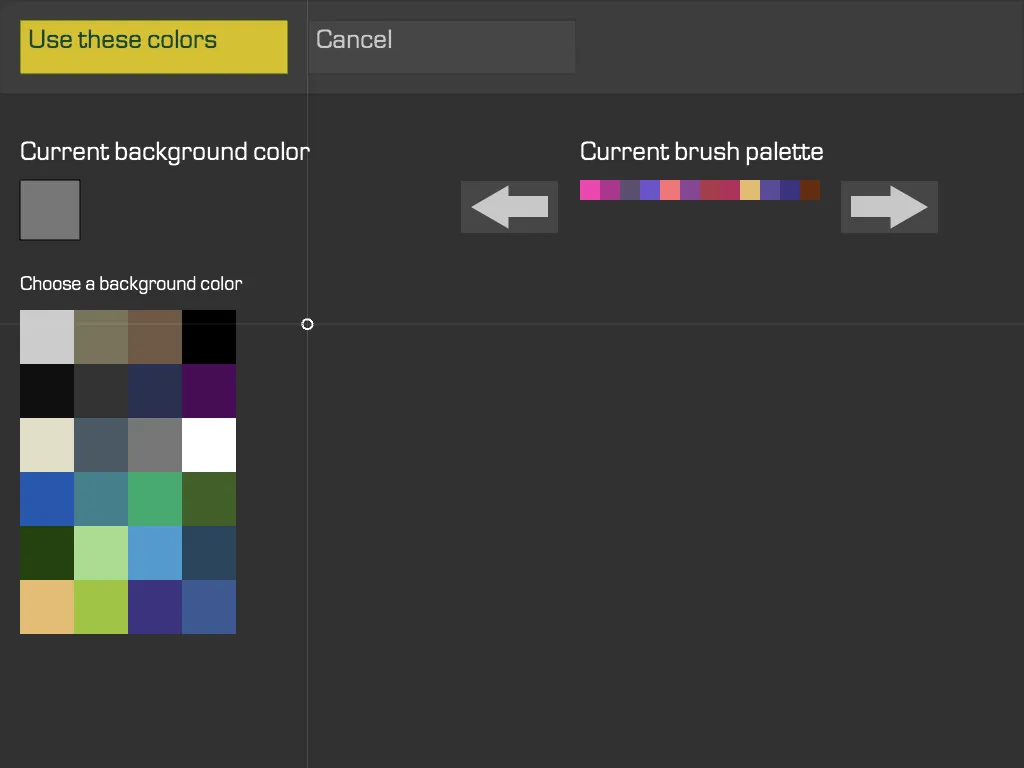

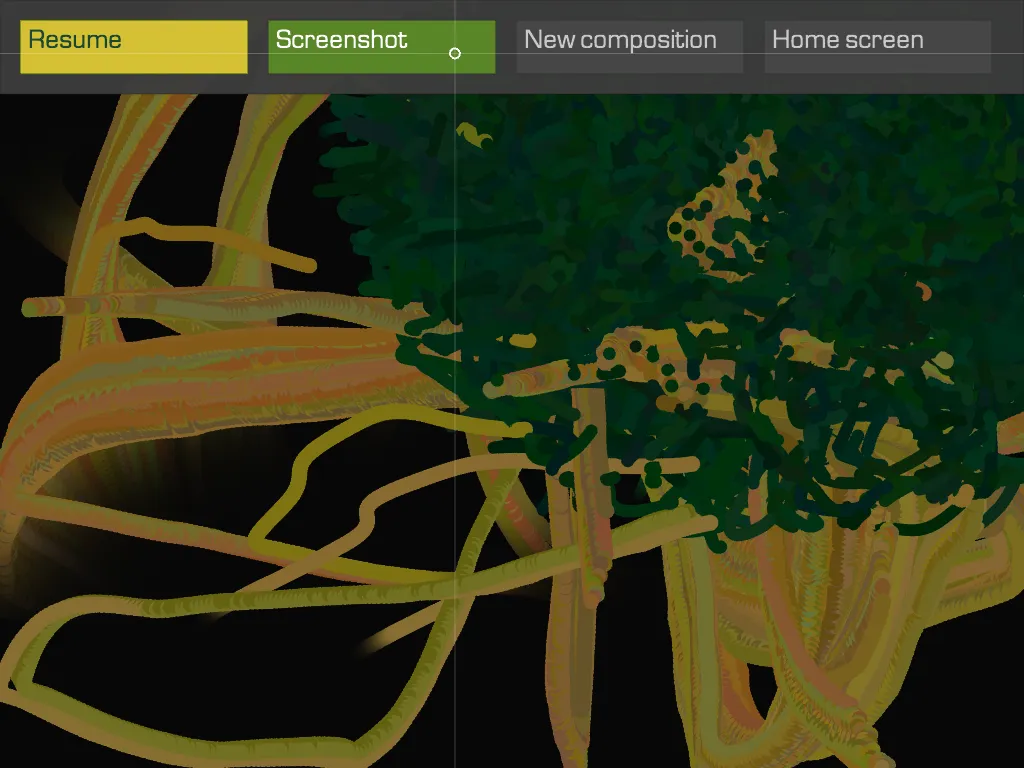

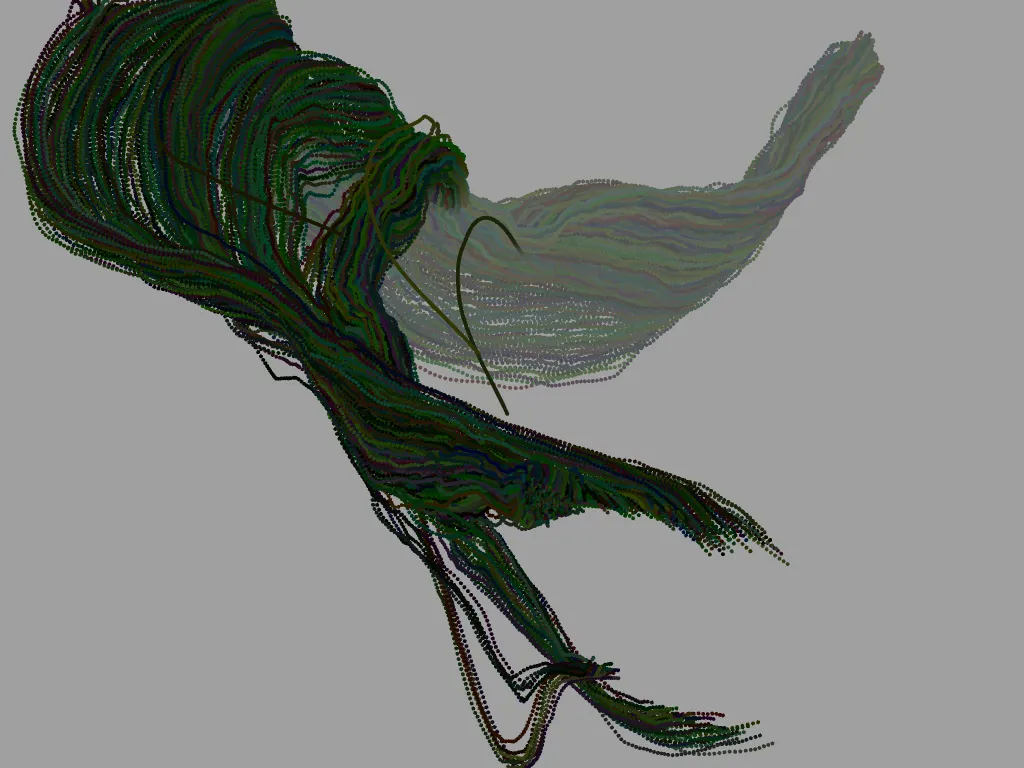

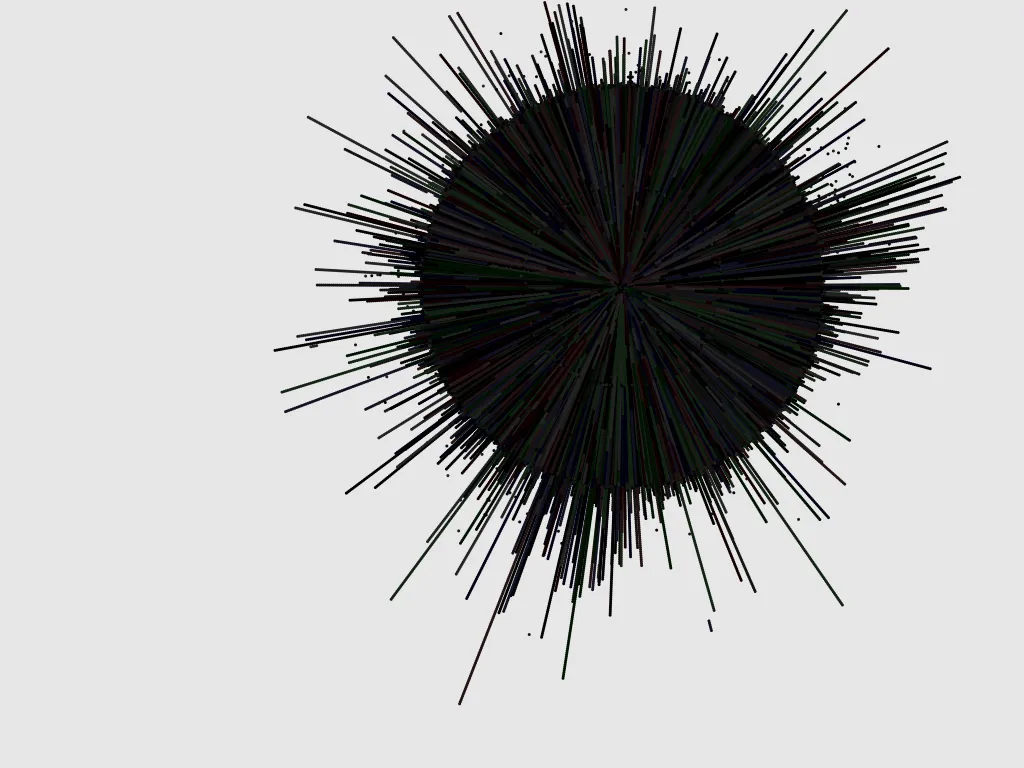

WildGarden

A footnote

In retrospect, I didn't do a great job of documenting and preserving my work in college. Notably, most of my programming projects from this time are interactive, with visuals that are animated or otherwise dynamic. The artifacts I still have are mainly still images of the visuals these projects produced and, in the case of my thesis project, the original source code (which is painful to look at now).